Are You Ready for AI to Shape Your Reality?

Prof. Dr. Albrecht Schmidt introduces the provocative idea of reality design and argues that we are no longer just designing interfaces; we are shaping how people experience the world and define their realities, one AI-augmented memory, perception, and thought at a time.

“What part of your reality is truly technology-free?”

This question, which Prof. Dr. Schmidt posed during his keynote at IUI 2025, might sound trivial, but it cuts surprisingly deep. Pause for a moment and ask yourself: how often are we truly without technology?

You might listen to music or podcasts while working out, rely on GPS while hiking, follow AI-generated recipes while cooking, or stream a movie while taking a bubble bath. Digital systems are constantly shaping how we move through the world. These interactions aren’t just conveniences; they’re extensions of how we perceive the world.

It’s in these ordinary moments that the extraordinary shift becomes clear. As AI weaves deeper into daily life, the line between digital tools and human perception becomes increasingly blurry. User experience is no longer confined to screens and buttons. We often unknowingly design the very contours of how people sense, remember, and move through the world.

Schmidt’s provocative concept of “reality design” challenges developers and designers to move beyond menus and buttons and instead think about systems that augment memory, enhance perception, and help us offload complex thinking.

From User Interfaces to User Realities

For decades, the design of interactive systems has focused on usability, efficiency, and reducing friction. The mouse, the touchscreen, and the command line each served as a bridge between human intent and machine execution.

Reality design builds a new bridge. It asks not only how we interact with machines but also how machines alter our perception and behavior in return.

Schmidt argues that technologies increasingly serve as external memory, sensory augmentation, and decision-making assistants. With large language models capable of summarizing, predicting, and suggesting actions, the digital system is no longer a passive tool. It becomes a participant in shaping thought and experience — a personal perception agent.

Whether this sounds exciting or a bit unsettling (personally, I’d say a mix of both), one thing is clear: These types of technologies are no longer confined to science fiction. What once lived in the imaginations of writers and futurists is unfolding in real time.

Memory and Cognition as Design Space

One of the most tangible examples Schmidt explores is human memory extension. Imagine a system that captures every moment of your life and allows you to recall it with perfect clarity. Technologies for continuous “lifelogging,” ambient display feedback, and intelligent memory retrieval are no longer confined to labs. Projects like ReCALL have already explored how digital systems can externalize memory; similar ideas are appearing in commercial wellness and productivity tools.

The implications are profound. Rather than building apps that help people take notes or set reminders, future systems might seamlessly augment human recall. Tools like Rewind.ai, which record your digital life and make it searchable, already bring this idea to life. It’s quite mind-blowing if you ask me. Can you imagine how much easier high school would have been if that had existed?

But it’s also scary to think your mother could pinpoint the exact moment when she did indeed tell you to do that, whatever that is.

But the potential applications go beyond the educational setting. Such systems could reinforce or suppress memories based on emotional weight or relevance, provide contextual nudges, and adaptively support decision-making based on personal history. For example, AI companions like Replika already engage users in emotionally aware conversations that evolve over time, subtly influencing mood, memory, and self-reflection.

Cognition itself becomes a canvas for design.

Superpowers Through Augmented Perception

Schmidt connects reality design with a concept people have always found compelling: superpowers. Not the kind found in comic books, but real, practical enhancements made possible by technology.

Consider selective hearing at a distance, enhanced directional sense while swimming (as explored in Clairbuoyance), or visual slow motion that lets you focus on fast-moving events.

In these designs, AI systems sense intention, predict focus, and adapt feedback in real time. Rather than using a camera to observe the world, users might receive an intelligently filtered reality stream that highlights key moments and removes distractions.

This shift requires a different mindset in both development and research. It is no longer sufficient to ask how to make systems easier to use. Instead, we must ask how to amplify what humans can do and how to apply it in real time.

Designing for Delegation and Trust

As AI systems grow more capable, users increasingly delegate tasks and decision-making to them. This introduces a new category of interaction: intervention. People must understand when and how to trust an automated process, when to step in, and how to recover from unexpected outcomes.

Take something as common as navigation. If your GPS suggests a strange detour, do you blindly follow it (straight into a lake, perhaps) or trust your own instinct? Now replace that map with a system that chooses your media, filters your memories, or subtly rewrites your calendar. The stakes escalate quickly. Designers must create systems that make it clear why a decision was made, when it can be overridden, and what information is being used.
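To make that last point a little more concrete, here is a minimal sketch of what such a decision record could look like in code. Nothing here comes from Schmidt’s keynote: the `DelegatedDecision` class, its fields, and the navigation example are all hypothetical names chosen purely for illustration. The idea is simply that every delegated choice carries its own reason, its inputs, and a way for the user to step in.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DelegatedDecision:
    """One choice a system made on the user's behalf (hypothetical structure)."""
    action: str                          # what the system decided to do
    rationale: str                       # why, in plain language
    inputs_used: list[str]               # which information fed the decision
    overridable: bool = True             # whether the user may step in
    overridden_by: Optional[str] = None  # the user's replacement action, if any

    def explain(self) -> str:
        """Summarize the decision so the user can judge whether to trust it."""
        status = "can be overridden" if self.overridable else "cannot be overridden"
        return (
            f"Chosen: {self.action}. Why: {self.rationale}. "
            f"Based on: {', '.join(self.inputs_used)}. This choice {status}."
        )

    def override(self, user_action: str) -> None:
        """Record the user's intervention instead of silently discarding it."""
        if not self.overridable:
            raise PermissionError("This decision is locked and cannot be overridden.")
        self.overridden_by = user_action

    @property
    def effective_action(self) -> str:
        """The action that applies after any user intervention."""
        return self.overridden_by or self.action


# Illustrative usage: the GPS detour from the paragraph above.
detour = DelegatedDecision(
    action="Take the lakeside detour",
    rationale="Reported congestion on the main road",
    inputs_used=["live traffic data", "current location"],
)
print(detour.explain())
detour.override("Stay on the main road")
print(detour.effective_action)
```

A real system would need far more than this (for instance, deciding how much explanation is useful before it becomes noise), but even a small structure like this forces the three questions of why, what data, and whether it can be overridden to be answered at design time.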

These are not just technical problems; they are ethical design questions. Memory systems must include boundaries. Perception-augmenting tools must guard against overload or manipulation. As AI becomes more ambient and predictive, trust must be earned, not assumed.

Benchmarks for these systems cannot rely solely on technical accuracy. They must include human judgment, behavior, and long-term impact.

Schmidt points out that traditional machine learning benchmarking methods leave humans out of the loop. In a human-centered AI paradigm, this is no longer acceptable. Metrics must reflect real-world utility and user experience.

The Placebo Effect of Intelligence

One of the keynote’s most sobering observations is the “placebo effect” of AI. Users often perceive systems as more capable or intelligent simply because they are labeled as AI-powered. This can lead to over-trust, reduced critical thinking, and even the acceptance of biased or flawed output.

Researchers have shown that users are more likely to believe and follow suggestions when told they come from an intelligent system, regardless of whether the output quality justifies the trust. In memory augmentation, recommendation systems, and learning assistants, this can lead to long-term changes in behavior and cognition. And honestly, we’re all guilty of sometimes letting AI do the work for us without double-checking (that long-overdue response to a client or the summary of a dreaded two-hour meeting).

It’s just become too convenient to hit the generate and send buttons.

As reality design becomes more powerful, designers must take ethical responsibility. Transparency, explainability, and user empowerment must be built in from the ground up.

A Call for Methodological Rethinking

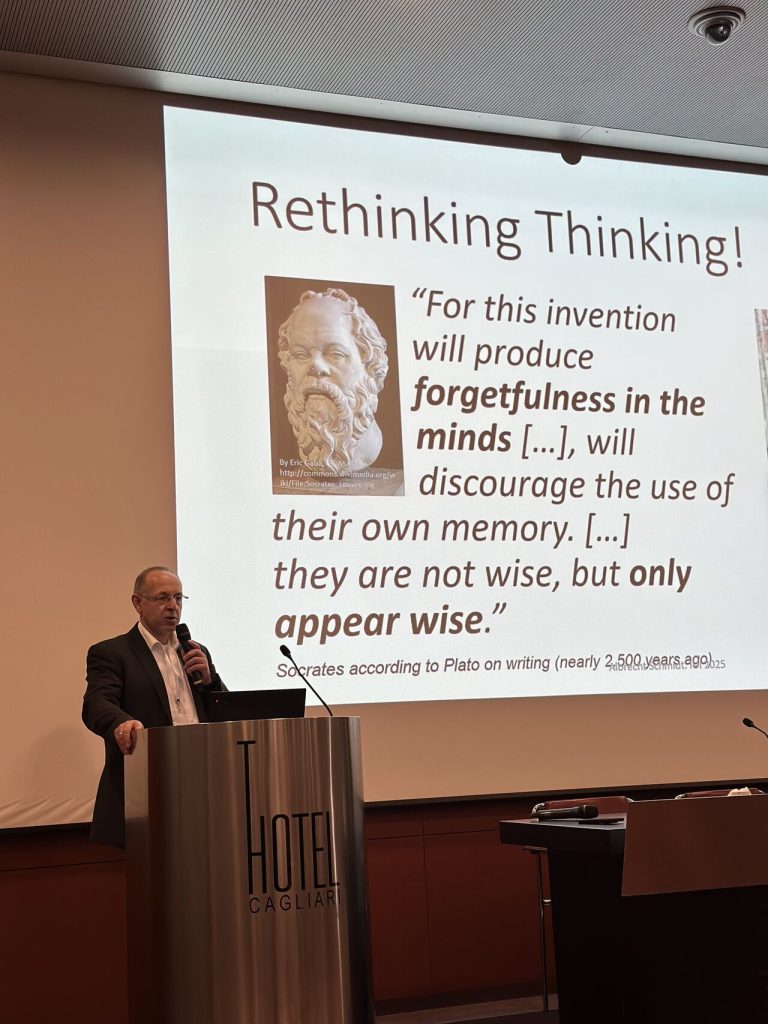

Schmidt’s keynote repeatedly returns to a single provocative idea: we must rethink thinking. Just as writing changed the course of human civilization, AI systems that support thought, memory, and perception are poised to transform how we relate to information, creativity, and decision-making.

Reality design is not a metaphor. It is a literal shift in responsibility for how people construct meaning and make life choices.

For designers and engineers, this requires a new methodology. Interface mockups and usability heuristics are not enough. Teams must study how systems influence memory formation, trust dynamics, and cognitive load. They must explore hybrid intelligence models where humans and AI collaborate, not compete.

They must ask: What tasks do humans still do better than AI? How can systems elevate human insight rather than replace it?

When Convenience Becomes Control

But with this expanded power comes a deeper risk, one that Schmidt calls out bluntly. As AI systems become more embedded in our daily lives, the biggest threat may not be technological failure, but something more human: the loss of motivation to think, remember, or decide for ourselves.

When machines remember everything, predict our needs, and even finish our sentences, what happens to curiosity? To learning? To critical thought? It’s not hard to imagine scenarios where this convenience slides into dependency. Think of a world where memory is outsourced so completely that people forget how to form their own. Or where personalized news and AI companions quietly nudge belief systems in subtle, manipulative directions.

Schmidt warns that the more seamless these tools become, the more invisible their influence and the easier it is to shape not just experiences, but perspectives. In this light, reality design starts to look less like a creative opportunity and more like a moral imperative.

Reality is a Design Decision

“We shape our reality; thereafter it shapes us.”

In closing, Schmidt paraphrases Churchill’s line, “We shape our buildings; thereafter, they shape us.” As human-computer interaction moves beyond screens, the challenge is not just to make systems more intelligent but also to make them more humane.

Reality design gives us the opportunity to empower users in new ways. But it also demands we take responsibility for the experiences we create and the minds we shape.

In the age of human-centered AI, what you build doesn’t just help users get things done. It defines how they see the world.

So, it might be time to ask yourself: how are you shaping the world for others? These technologies don’t design themselves. Every prompt, every interface, every AI-assisted moment is the result of human choices, yours included.

AI is already shaping your reality. The real question is: Are you actively shaping it back?

How do you feel about AI shaping your reality on a scale from 1 (I’m so excited, and I can’t hide it) to 10 (Off-grid living, here I come)?